After posting previously about dipping my toe in the Infrastructure as Code waters with Terraform, a kind individual (who requested staying nameless) asked if I’d encountered AWS’ native Cloud Development Kit (CDK). I vaguely remember seeing a Beta announcement back when the toolkit was first announced, but had discounted it at the time as it wasn’t stable for production workloads. Reviewing again, I’d missed the announcement that the CDK had graduated to general availability in July last year.

Whilst I was quite happy with Terraform’s performance where I’ve used it, my mantra towards cloud-based platforms is: (where possible) stay native. So I took a look at what the AWS-CDK offered, and immediately came across some excellent resources to get me rapidly up to speed.

- The CDK Workshop provides great setup guides, and without being a dev I was able to get CDK functional in my environment with minimal fuss. The workshop is helpfully split and duplicated into your own (supported) language of choice (more on this benefit later). If you’ve been here before, it should be no surprise that I took advantage of the Python modules.

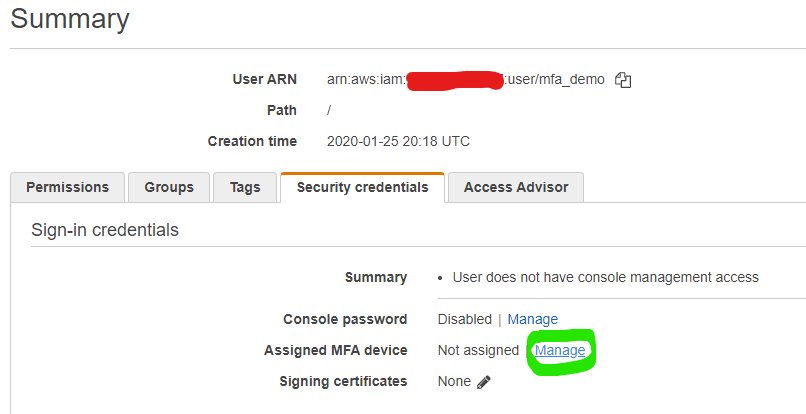

N.B. The workshop will set you up with AWS Access Keys with Administrator privileges. This may have been the trigger for yesterday’s post on protecting your keys with MFA. You may want to consider the same…

- The AWS-Samples repo should be a required bookmark for anyone working with AWS. The AWS-CDK-Examples repo is no exception, providing great real-world use cases to suggest potential architecture design patterns. As with the workshop above, the examples repo has examples across all supported coding languages.

- Buried in the above examples repo was a link to a recorded demo from two of the CDK’s lead developers: Elad Ben-Israel and Jason Fulghum. I’d definitely recommend taking an hour to watch the devs leverage the power of the CDK to live-code a solution to a real-world problem; it greatly helped me get started.

(Almost) Language agnostic

In addition to being native from AWS themselves, one of the immediate values to me is that (unlike Terraform, CloudFormation, or similar) the CDK maps into common programming languages as just another set of libraries/modules. From my perspective this means I can leverage the power of IaC whilst staying within my preferred Python, without needing to learn an additional language/syntax.

For the curious, this is enabled by JSII, which maps between the toolkit and a given language’s syntax and structure, but I’ll admit this aspect gets me well outside of my coding comfort zone; I’ll just appreciate that it works. In practice this means that if Python isn’t your language of choice, you’ve plenty of other popular options.

Getting Started

If I’ve whetted your appetite, I’d recommend you stop reading the blog of someone who is just getting to grips with the CDK himself and jump into the resources above.

If you’re still here (why? seriously, check out the links above from those that know what they’re talking about.) I’ve essentially mapped the primary commands/features I’d leveraged from Terraform into their CDK equivalents.

terraform init <==> cdk init

cdk init will do what it says on the tin: initialise your current working directory with the basic building blocks needed to start defining your architecture and pushing to the cloud.

Warning: if you’ve an existing codebase you’re planning to CDK-ify, I’d strongly recommend init’ing in a blank directory first, so you can review the changes the command will make to your existing workspace.

terraform plan <==> cdk synth

cdk synth takes the code you’ve defined in your language of choice and creates a CloudFormation template describing the defined architecture, assets and configuration. Nothing is deployed at this stage.
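To give a feel for what comes out the other end, here is a hand-written sketch of the general shape of a CloudFormation template for a single Lambda function. This is not actual cdk synth output: the logical ID, handler, runtime, role ARN and S3 location are all placeholders I’ve made up for illustration, and a real synthesized template will contain a good deal more.

```python
import json

# Hand-written illustration of the general shape of a CloudFormation template.
# NOT real `cdk synth` output: every value below is a made-up placeholder.
template = {
    "Resources": {
        # Logical ID of the resource (hypothetical name)
        "CardspotterLambda": {
            "Type": "AWS::Lambda::Function",
            "Properties": {
                "Handler": "handler.main",  # placeholder module.function
                "Runtime": "python3.8",     # placeholder runtime
                "Role": "arn:aws:iam::123456789012:role/placeholder-role",
                "Code": {
                    "S3Bucket": "placeholder-bucket",
                    "S3Key": "placeholder.zip",
                },
            },
        }
    }
}

# Print the template as JSON, one of the formats CloudFormation accepts
print(json.dumps(template, indent=2))
```

The point is simply that whatever language you write your stack in, what CloudFormation eventually sees is a declarative document along these lines.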

terraform plan <==> cdk diff

Diverging from Terraform’s workflow, cdk diff shows what changes are going to be made: the difference between your environment as it’s currently running and the future state that deploying your current CDK stack will create. Leading us to…

terraform apply <==> cdk deploy

cdk deploy is the first command that will actually make changes to your running AWS environment. All being well, it takes the CloudFormation template developed by cdk synth and actually runs the template under CloudFormation to build, modify or remove your infrastructure as required.

terraform destroy <==> cdk destroy

Again, as it says on the tin: cdk destroy will destroy all(*) resources created and managed by its defined stack(s), removing them from AWS. As I stated when discussing Terraform and IaC originally, this is the key value for my interest in IaC toolkits: confidence that at the end of a session I can tear down the infrastructure I’ve been using and (hopefully) not get hit with a nasty AWS bill if I forget about service $x and leave it running for a few weeks.

(* As I found with Terraform, S3 buckets (and I suspect other data services) don’t get removed by the various destroy commands if they’ve been populated with any data after being instantiated. You have been warned…)
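For those who think better in code, the mapping above can be summarised as a small Python lookup. This is purely my own illustrative sketch: the names TERRAFORM_TO_CDK and cdk_commands are made up, and in practice some commands want extra arguments that aren’t shown here.

```python
# Rough Terraform -> CDK mapping as described in this post.
# `plan` maps to two CDK commands, since synth and diff each cover part of it.
TERRAFORM_TO_CDK = {
    "init": ["init"],
    "plan": ["synth", "diff"],
    "apply": ["deploy"],
    "destroy": ["destroy"],
}


def cdk_commands(terraform_subcommand):
    """Return the cdk command lines (as argv lists) for a terraform subcommand."""
    try:
        subcommands = TERRAFORM_TO_CDK[terraform_subcommand]
    except KeyError:
        raise ValueError(
            f"no CDK equivalent recorded for {terraform_subcommand!r}"
        ) from None
    return [["cdk", sub] for sub in subcommands]


for tf_command in TERRAFORM_TO_CDK:
    print(f"terraform {tf_command} ->", cdk_commands(tf_command))
```

Each argv list could be handed to subprocess.run if you wanted to script the workflow, though I’ve just been typing the commands by hand.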

First impressions…

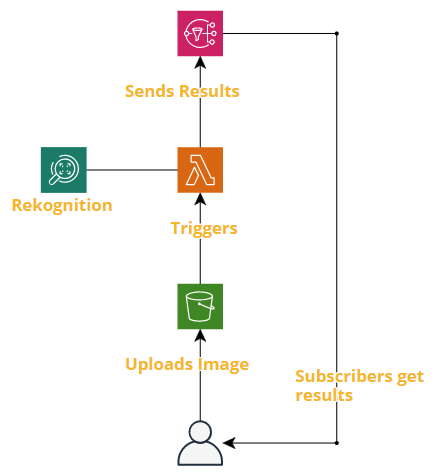

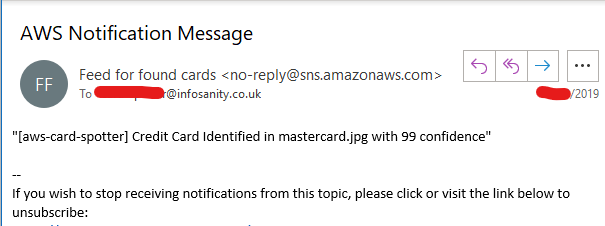

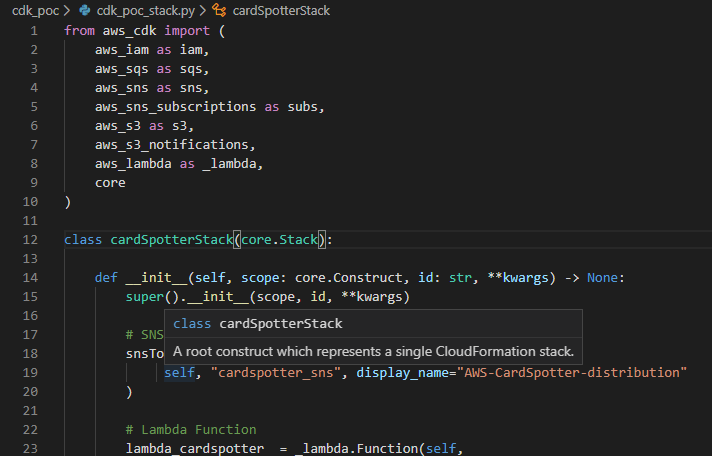

…very good. As I did with Terraform, I built a proof of concept using the CDK to deploy my little microservice for automatically spotting credit cards in images. This was something I was able to achieve with a few hours of learning, research, and trial and error. I’ll likely cover this separately shortly…

With Terraform I found the use-case of a single Lambda function and peripheral services harder than expected: the code for Lambda needed to be packaged manually as an archive prior to diving into the various *.tf configuration files. Admittedly not the end of the world once the process flow was known, but I did find it broke workflow and felt a little cumbersome.
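To illustrate the sort of manual packaging step I mean, here is a minimal sketch using nothing but Python’s standard library. The package_lambda helper and the file names are hypothetical, and this is just one way of zipping up a directory rather than the exact process I followed.

```python
import tempfile
import zipfile
from pathlib import Path


def package_lambda(source_dir, archive_path):
    """Zip every file under source_dir, storing paths relative to it.

    Write the archive somewhere outside source_dir so it doesn't pick itself up.
    """
    source = Path(source_dir)
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            if path.is_file():
                archive.write(path, path.relative_to(source).as_posix())
    return archive_path


# Small demo against a throwaway directory containing a placeholder handler
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "lambda"
    src.mkdir()
    (src / "handler.py").write_text("def main(event, context):\n    return 'ok'\n")
    archive = package_lambda(src, Path(tmp) / "lambda.zip")
    with zipfile.ZipFile(archive) as zf:
        packaged_names = zf.namelist()

print(packaged_names)
```

Not difficult, but it is an extra step to remember (and re-run) every time the function code changes.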

With CDK this pain point was completely removed. When defining the Lambda resource in the CDK’s stack configuration, you simply pass the code parameter the directory containing your Lambda function (and required libraries, if necessary), and CDK will do the rest on a deploy. For example, literally just:

example_function = _lambda.Function(self, "cardspotterLambda", code=_lambda.Code.asset("./lambda/"), some_other_params....)

Teaser for things to come…

—

Andrew