Apologies for the break in regular postings; I was caught by surprise when I realised that it had been over a month since the last InfoSanity post. Unfortunately I haven’t won the lottery and been living in the lap of luxury; it was just real life and work getting in the way of extracurricular activities.

Normal service should now be resuming shortly.

— Andrew Waite

Month of PHP bugs 2010

Following in the now well-established form of a ‘Month of X Bugs’, php-security.org has just opened its call for papers for a second month, to update and expand on its successful run in 2007.

I’ll admit that I largely ignored the original Month of PHP Bugs (MOPB); at the time I had just made the decision to stop coding in PHP and try a more mature language. I had found PHP to be a very simple language to learn and code in, but as a result I also found it very simple to code very badly in as well (and I’ve since found that a bad coder can code badly in any language, hence why I gave up the career path of developer).

However, this month’s SuperMondays event changed my perspective slightly. Lorna Jane gave a great presentation on using PHP to provide a web services architecture, and at first glance it looks like PHP has improved and matured significantly since I last used it. For those interested, Lorna’s talk was recorded and is available here, and Lorna’s own take on the event can be found here.

So while I’m not in a position to contribute to the month’s releases, I will be paying closer attention to the resources released this time around. If you think you can contribute, the organizers have posted a list of accepted topics:

Accepted Topics/Articles

- New vulnerability in PHP [1] (not simple safe_mode, open_basedir bypass vulnerabilities)

- New vulnerability in PHP related software [1] (popular 3rd party PHP extensions/patches)

- Explain a single topic of PHP application security in detail (such as guidelines on how to store passwords)

- Explain a complicated vulnerability in/attack against a PHP widespread application [1]

- Explain a complicated topic of attacking PHP (e.g. explain how to exploit heap overflows in PHP’s heap implementation)

- Explain how to attack encrypted PHP applications

- Release of a new open source PHP security tool

- Other topics related to PHP or PHP application security

[1] Articles about new vulnerabilities should mention possible fixes or mitigations.

And prizes are available for the best submissions:

| # | Prize |
|---|---|
| 1. | 1000 EUR + Syscan Ticket + CodeScan PHP License |
| 2. | 750 EUR + Syscan Ticket |
| 3. | 500 EUR + Syscan Ticket |
| 4. | 250 EUR + Syscan Ticket |
| 5.-6. | CodeScan PHP License |
| 7.-16. | Amazon Coupon of 65 USD/50 EUR |

So what are you waiting for? Get contributing…

–Andrew Waite

Book Review: Virtualization for Security

After having this on my shelf and desk for what seems to be an eternity, I have finally managed to finish Virtualization for Security: Including Sandboxing, Disaster Recovery, High Availability, Forensic Analysis and Honeypotting. Despite having one of the longest titles in the history of publishing, it is justified as the book covers a lot of topics and subject matter. The chapters are:

- An Introduction to Virtualization

- Choosing the right solution for the task

- Building a sandbox

- Configuring the virtual machine

- Honeypotting

- Malware analysis

- Application testing

- Fuzzing

- Forensic analysis

- Disaster recovery

- High availability: reset to good

- Best of both worlds: Dual booting

- Protection in untrusted environments

- Training

Firstly, if you’re not security focused, don’t let the title put you off picking this up. While some of the chapters are infosec specific, a lot of the material is more general and could be applied to any IT system; the chapters on DR, HA and dual booting are good examples of this.

Undoubtedly the range of content in the book is one of its biggest draws. I felt like a kid in a sweet shop when I first read the contents and had a quick flick through; I just couldn’t decide where to start. This feeling continued as I read through each chapter: different ideas and options that I hadn’t tried were mentioned and discussed, resulting in me scribbling another note on my to-do list or putting the book down entirely while I turned my lab on to try something.

The real gem of information that I found in the book was in the sandboxing chapter, which was one of the topics that persuaded me to purchase the book in the first place. Considering that one of the book’s authors is Carsten Willems, the creator of CWSandbox, it shouldn’t be too surprising that this chapter covers sandboxing well. The chapter also covers creating a LiveCD for sandbox testing; while very useful for the context it was explained in, it was one of several parts of the book where my brain started to hurt from an overload of possible uses.

As you might have already guessed, the range of topics is also one of the book’s biggest weaknesses. There just isn’t enough space to cover each topic in sufficient depth. I felt this most in the topics that I’m more proficient with: the Honeypotting chapter does a great job of explaining the technology and methodology, but I was left wanting more. The disappointment from this was lessened on topics that I have less (or no) experience with, as all the material was new.

Overall I really liked the book; it provides an excellent foundation to the major uses of virtualisation within the infosec field, and perhaps more importantly leaves the reader (at least it did with me) enthusiastic to research and test beyond the contents of the book as well. The material won’t help you become an expert, but if you want to extend your range of skills there are definitely worse options available.

–Andrew Waite

Random 419

I want to say thank you to everyone who has supported this site and blog, but it is closing down as I am now rich thanks to the Central Bank of Nigeria. No, seriously, they sent me an email and everything….

Okay, maybe not, but it’s a while since I’ve seen a 419 (advance fee fraud) slip through to my inbox so thought I’d share. Originally I had planned to critique different parts of the email, but I still can’t believe people fall for these so instead I’ll just share the ‘wealth’ for all.

This is to congratulate you for scaling through the hurdles of screening by the board of directors of this payment task force. Your payment file was approved and the instruction was given us to release your payment and activate your ATM card for use.

The first batch of your card which contains 1,000.000.00 MILLION U.S. DOLLARS has been activated and is the total fund loaded inside the card. Your fund which is in total 10,000.000.00 MILLION U.S. DOLLARS will come in batches of 1,000.000.00 MILLION U.S. DOLLARS and this is the first batch.

Your payment would be sent to you via UPS or FedEx, Because we have signed a contract with them which should expired by MARCH 30th 2010 Below are few list of tracking numbers you can track from UPS website(www.ups.com) to confirm people like you who have received their payment successfully.

JOHNNY ALMANTE ==============1Z2X59394198080570

CAROL R BUCZYNSKI ==============1Z2X59394197862530

KARIMA EMELIA TAYLOR ==============1Z2X59394198591527

LISA LAIRD ==============1Z2X59394196641913

POLLY SHAYKIN ==============1Z2X59394198817702

Good news, We wish to let you know that everything concerning your ATM CARD payment despatch is ready in this office and we have a meeting with the house (Federal government of Nigeria) we informed them that your fund should not cost you any thing because is your money (Your Crad). Moreover, we have an agreement with them that you should pay only delivering of your card which is 82 U.S. DOLLARS by FedEx or UPS Delivering Company.

However, you have only three working days to send this 82 U.S. DOLLARS for the delivering of your card, if we don’t hear from you with the payment information; the Federal Government will cancel the card.

This is the paying information that you will use and send the fee through western union money transfer.

Name: IKE NWANFOR

Address: Lagos-Nigeria

Question: 82

Answer: yes

I wait the payment information to enable us proceed for the delivering of your card.

Tunde.

Do I really need to suggest that anyone ignore similar opportunities that may reach their inbox?

Additionally, if you want to find out more, or have a good laugh at the expense of these ‘con-men’, take a trip over to the excellent 419Eater site. These guys (and gals) do great work.

–Andrew Waite

Direct Access at NEBytes

Tonight was the second NEBytes event, and after the launch event I was looking forward to it. Unfortunately the turnout wasn’t as good as the first event; 56 were registered but I only counted approximately 22 in the audience. The topic I was most interested in was a discussion of Microsoft’s Direct Access (DA), which was billed as an ‘evolution in remote access capabilities’. Being a security guy, obviously this piqued my interest.

Tonight’s speaker covering DA was Dr Dan Oliver, managing director at Sa-V. Before I start I want to state that I have/had no prior knowledge of DA, and my entire understanding comes from the presentation/sales-pitch by Dan tonight. If anyone with more knowledge wants to point out any inaccuracies in my understanding or thoughts, I’d more than welcome getting a better understanding of the technology.

DA is an ‘alternative’ to VPNs (discussed more later) for a Microsoft environment. The premise is that it provides seamless access to core resources whether a user is in the office or mobile. The requirements are fairly steep and, as Dan discussed on several occasions, may be a stumbling block for an organisation wanting to implement DA immediately. These are (some of) the requirements:

- At least one Windows 2008 R2 server for AD and DNS services

- A Certificate Authority

- Recent, high-end client OS: Windows 7, Ultimate or Enterprise SKU only.

- IPv6 capable clients (DA will work with IPv6 to IPv4 technologies)

As few organisations have a complete Win7 roll-out, and even fewer have the resources available to roll out the higher-end versions, Dan was asked why the requirement exists. Answer: ‘Microsoft want to sell new versions, sorry’.

With DA pitched as an alternative to VPN, at numerous points in the presentation there was a comparison between the two solutions, and to me the sales pitch for DA seemed schizophrenic. Dan kept switching between DA being a complete improvement on current VPN solutions, and DA being suitable for access to lower priority services and data while organisations may prefer to remain with VPNs for more sensitive data. At this point I couldn’t help thinking ‘why add DA to the environment if you’re still going to have VPN technologies as well’. This was especially the case as Dan stated (and I can’t verify) that Microsoft do not intend to stop providing VPN functionality in their technologies.

From a usability and support perspective DA is recommended as it does not require additional authentication to create a secure connection to ‘internal’ services. Apparently having to provide an additional username/password (with RSA token/smartcard/etc.) needed to establish a VPN connection is beyond the capabilities of the average user.

One aspect that I did agree with (and if you listen to Exotic Liability you will be familiar with) is the concept of re-perimeterisation. The idea is that the traditional perimeter of assets internal to a firewall is no longer relevant for protecting resources in the modern environment, and that the modern perimeter is where data and users are, not tied to a particular geographical location or network segment. However, rather than the perimeter expanding to incorporate any end user device that may access or store sensitive data, Dan claimed that DA would shrink the perimeter to only include the data centre, effectively no longer being concerned with the security of the client system (be it desktop, laptop, etc.).

This point made me very concerned for the model of DA: if the client machine has seamless, always-on access to ‘internal’ corporate services and systems, I would be even more concerned for the security of the end user machine. If a virus/trojan/worm infects the system with the same access as the user account, then it too has seamless, always-on access to the same internal services. I’m hoping this weakness is only in my understanding of the technology, as it seems like a gaping hole in the technology. If anyone can shed any light on this aspect of DA I’d appreciate some additional pointers to help clear up my understanding.

At this point I still can’t see an advantage to implementing DA over more established alternatives; my gut feeling is that DA will either become ubiquitous over the coming years or disappear without making an impact. Because it doesn’t play nice with the most widely implemented MS technologies, let alone ‘nix or OSX clients, and the strict requirements make a roll-out expensive, I expect it to be the latter, but I’ve been wrong before.

At this point I decided to make a speedy exit from the event (after enjoying some rather good pizza) as the second session was dev based (Dynamic consumption in C# 4.0, Oliver Sturm) and I definitely fit in the ‘IT Pro’ camp of the NEBytes audience.

Despite my misgivings from the DA presentation I still enjoyed the event and look forward to the next. If you were at either of the events please let the organisers know your thoughts and ideas for future events by completing this (very) short survey. Thanks guys.

— Andrew Waite

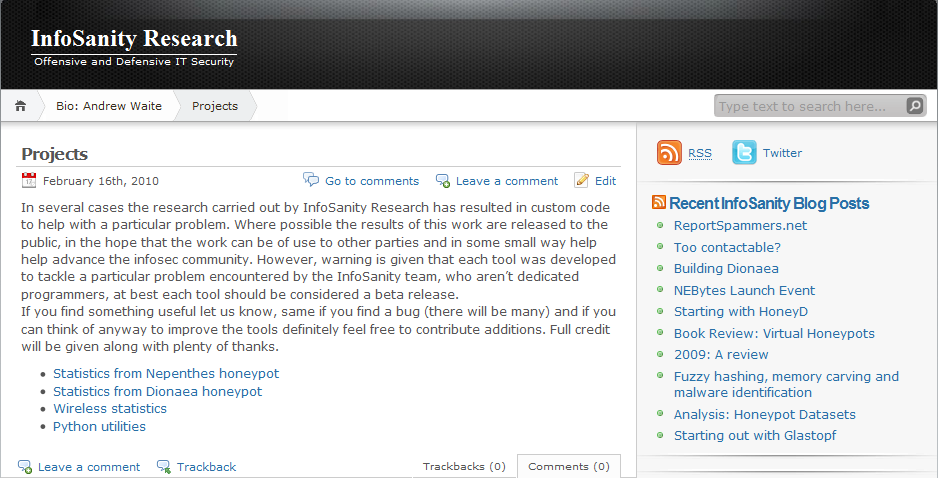

New Projects Section

The core InfoSanity site has just (in the last 24 hours) had the first of several planned refreshes go live. In this case it is a section of the site dedicated to the code and tools released as part of the research carried out by InfoSanity. No new content yet, but it has served as a nice reminder of some of the intended features still incomplete in existing projects; hopefully updates should be coming soon.

The start of the section can be found here, alternatively just navigate from the site’s menu. For those feeling lazy, a sneak peek:

— Andrew Waite

ReportSpammers.net

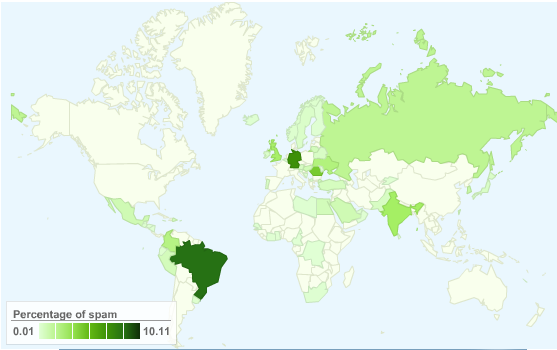

I was recently pointed towards www.reportspammers.net, which is a good resource for all things spam related and is steadily increasing the quantity and quality of the information available. As much as I like the statistics that can be gathered from honeypot systems, live and real stats are even better, and the data utilised by Report Spammers is taken from the email clusters run by Email Cloud.

One of the first resources released was the global map showing active spam sources (static image below); it is updated hourly and the fully interactive version can be found here.

In addition to the global map, Report Spammers also lists the most recent spamvertised sites seen on its mail clusters. I’m undecided on the ‘name and shame’ methodology due to the risk of false positives, but if you’re looking for examples of spamvertised sites it will prove a good resource (and one I intend to delve deeper into next time I’m bored). Just beware: sites that actively advertise via spam are rarely places that you want to point your home browser at. You have been warned.

If you are wanting a resource to explain spam and the business model behind it Report Spammers could be a good starting point. It even has the ability to explain spam to non-infosec types that still think spam comes in tins. Keep this in mind next time you need to run another information security awareness campaign.

— Andrew Waite

Too contactable?

Yesterday I got curious:

My initial count was five, but from the responses that I received I realised I had missed some. In a ‘general’ sorting of most common to least from my (admittedly small) sample set, the available contact methods are:

- Email (always multiple accounts per person)

- Instant Messaging (MSN, AOL, etc.) (usually multiple per person)

- Twitter (often multiple accounts)

- Skype

- VoIP

- IRC

- Google Wave

Which seems to be a lot, and from the responses I seem to be behind the curve in contactability. All this makes me wonder, in a world where outdated and not updated client applications are a growing intrusion vector do we really need all these ways for people and systems to communicate with us?

While you’re thinking, are there any of your communication tools that you could do without? If you stopped signing into MSN (for example) would you lose contact with anyone who couldn’t contact you via a different communication channel?

I’m not sure if there is a purpose to these thoughts or the very unscientific findings, but I’ve been thinking about this for a while so thought I’d share.

</ramblings>

— Andrew Waite

P.S. thanks to those who participated, you know who you are.

Building Dionaea

As part of a new and improved environment I’ve just finished building up a new Dionaea system. Despite the ease with which I found the install of my original system, I received a lot of feedback that others had a fair amount of difficulty during system build. So this time around I decided to pay closer attention to my progress to try and assist others going through the same process.

Unfortunately I’m not sure I’m going to be able to offer as many pearls of wisdom as I originally hoped, as my install went relatively smoothly. The only real problem I hit was that after following Markus’ (good) documentation my build didn’t correctly link to libemu. Bottom line: keep an eye on the output of ./configure when building Dionaea. In my case the parameters passed to the configure script didn’t match my system so needed to be modified accordingly.

On the off chance that it’s of use to others (or I forget my past failures and need a memory aid) my modified ./configure command is below:

    ./configure \
        --with-lcfg-include=/opt/dionaea/include/ \
        --with-lcfg-lib=/opt/dionaea/lib/ \
        --with-python=/opt/dionaea/bin/python3.1 \
        --with-cython-dir=/usr/bin \
        --with-udns-include=/opt/dionaea/include/ \
        --with-udns-lib=/opt/dionaea/lib/ \
        --with-emu-include=/opt/dionaea/include \
        --with-emu-lib=/opt/dionaea/lib/ \
        --with-gc-include=/usr/include/gc \
        --with-ev-include=/opt/dionaea/include \
        --with-ev-lib=/opt/dionaea/lib \
        --with-nl-include=/opt/dionaea/include \
        --with-nl-lib=/opt/dionaea/lib/ \
        --with-curl-config=/opt/dionaea/bin/ \
        --with-pcap-include=/opt/dionaea/include \
        --with-pcap-lib=/opt/dionaea/lib/ \
        --with-glib=/opt/dionaea
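For anyone wanting to keep an eye on the ./configure output without reading every line, the following is a minimal sketch rather than anything from the Dionaea documentation. It assumes the usual autoconf-style `checking for ... yes/no` output lines; the helper name `failed_checks`, the file name `configure.out` and the installed binary path are illustrative placeholders, and the exact wording of the libemu checks on your system may differ.

```shell
#!/bin/sh
# Hypothetical helper (not part of Dionaea): print the lines of saved
# configure output that mention a keyword and whose check ended in "no".
# Assumes autoconf-style "checking for X... no" lines.
failed_checks() {
    # $1 = keyword to look for (e.g. emu)
    # $2 = file holding the saved ./configure output
    grep -i "$1" "$2" | grep -E '\.\.\. *no$'
}

# Usage sketch, run from the Dionaea source directory:
#   ./configure <your flags> 2>&1 | tee configure.out
#   failed_checks emu configure.out
#
# After installing, ldd can show whether the binary picked up libemu.
# The path below is an assumption based on the /opt/dionaea prefix:
#   ldd /opt/dionaea/bin/dionaea | grep -i emu
```

If `failed_checks` prints nothing for `emu`, no libemu-related check reported "no" in that format; it is still worth a look through the full output (and config.log) before running make.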

— Andrew Waite

<update 20100606> A new Dionaea build encountered a problem with libemu; the ./configure above has been edited to reflect the additional changes I required to compile with libemu support. </update>

NEBytes Launch Event

Last night (2010-01-20) I had the pleasure of attending the launch event for NEBytes.

North East Bytes (NEBytes) is a User Group covering the North East and Cumbrian regions of the United Kingdom. We have technical meetings covering Development and IT Pro topics every month. About

SharePoint 2010

The launch event was done in conjunction with the SharePoint User Group UK (SUGUK), so it was no surprise when the first topic of the night covered SharePoint 2010, delivered very enthusiastically by Steve Smith. I’ve got no experience with SharePoint so can’t comment too much on the material, but from the architectural changes I got the impression that 2010 may be more secure than previous versions as the back-end is becoming more segmented, with different parts of the whole having discrete, dedicated databases. While it might not limit the threat of a vulnerability, it should be able to reduce the exposure in the event of a single breach.

Steve also highlighted that there is some very granular accountability logging, in that every part of the application and every piece of data receives a unique ‘Correlation ID’. The scenarios highlighted suggested that this allows for in-depth debugging to determine the exact nature of a crash or system failure; by the same system this should allow for some good forensic pointers when investigating a potential compromise or breach.

Again viewing the material from a security standpoint, I was concerned that the options that appeared as part of Steve’s walkthrough defaulted to the less secure choices: NTLM authentication rather than Kerberos, and unencrypted communication rather than SSL. One of Steve’s recommendations did concern me: to participate in the Customer Experience Improvement Program. While I’ve got no evidence to support it, I’m always nervous about passing debugging and troubleshooting information to a third party; you never know what information might get leaked with it.

Silverlight

Second session of the night was Silverlight, covered by Mike Taulty (and it is worth pointing out that this session came after a decent quantity of freely provided pizza and sandwiches). As with SharePoint I had no prior experience of Silverlight other than hearing various people complain about it via Twitter, so I found the talk really informative. For those that don’t know, Silverlight is designed to be a cross-browser and cross-platform ‘unified framework’ (providing your browser/platform supports Silverlight…)

From a developer and designer perspective Silverlight must be great; the built-in functionality provides access to capabilities that I could only dream about when I was looking at becoming a dev in a past life. The integration between Visual Studio for coding and Blend for design was equally impressive.

Again I viewed the talk’s content from a security perspective. Mike pressed on the fact that Silverlight runs within a tightly controlled sandbox to limit functionality and provide added security. For example the code can make HTTP[S] connections out from the browsing machine, but is limited to the same origin as the code, or to other domains provided the target explicitly allows cross-domain requests from that origin.

However, Silverlight applications can be installed locally in ‘Trusted’ mode, which reduces the restrictions put in place by the sandbox. Before installing the app, the sandbox will inform the user that the app is to be ‘trusted’ and warn of the implications. This is great, as we all know users read these things before clicking next when wanting to get to the promised videos of cute kitties… I did query this point with Mike after the presentation and he, rightly, pointed out that any application installed locally would have the ability to access all the resources that aren’t protected when in trusted mode. I agree with Mike, but I’m concerned that average Joe User will think ‘OK, it’s only a browser plugin’ (not that this is the case anyway) where they might be more cautious if a website asked them to install a full-blown application. Users have been conditioned to install plugins to provide the web experience they expect (Flash etc.)

Hyper-V

The final talk was actually the one I was most interested in at the start of the night, and was presented by James O’Neil. In the end I was disappointed; unlike the other topics I didn’t get too much that was new to me from the session, I’m guessing because virtualisation solutions are something I encounter on a regular basis. The only real take-away from the talk was that James gets my Urgh! award for using the phrase ‘private cloud infrastructure’ without cracking a smile at the same time.

Summary

The night was great, so a big thanks to the guys that set up and ran the event (with costs coming out of their own pockets too). The event was free, the topics and speakers were high quality and to top it off there were some fairly impressive giveaways as well, from the usual stickers and pens to boxed Win7 Ultimate packs.

If you’re a dev or IT professional, I’d definitely recommend getting down to the next event.

— Andrew Waite