After I posted previously about dipping my toe in the Infrastructure as Code waters with Terraform, a kind individual (who asked to remain nameless) asked if I’d encountered AWS’ native Cloud Development Kit (CDK). I vaguely remember seeing a Beta announcement some time back when the toolkit was first announced, but had discounted it at the time as it […]

Clouds in BlackHat's conference

Being on the other side of the pond I wasn’t able to attend Black Hat, but I have been keeping a keen eye on the conference materials and talk recordings released after the conference’s close. As I’ve recently been researching the latest buzz of Cloud Computing, naturally I was initially drawn to the talks […]

CloudCamp Lightning talks

Last week’s CloudCamp in Newcastle started off with a series of lightning talks: five minutes on a topic of the speaker’s choice.

CloudCamp sound bites

Whilst I research further, I thought I’d share some of the comments and soundbites (mostly paraphrased) I took a note of during the CloudCamp NE event.

Initial thoughts from CloudCamp

Tonight was the second CloudCamp event in the North East of England, and my first serious look at cloud computing. I really enjoyed the event and believe I received excellent value from attendance, so thanks to all those who helped run the event, presented and discussed aspects of the field with me during the breakout sessions.

Automating infrastructure code audits with tfsec

Unless you’ve been living under a rock for the last few years, you’ll know a few things about the Cloud: functionality and capabilities released by Cloud vendors are expanding at an exponential rate; the DevOps paradigm is (seemingly) here to stay – the several cold days of building physical hardware sat on the floor of a […]

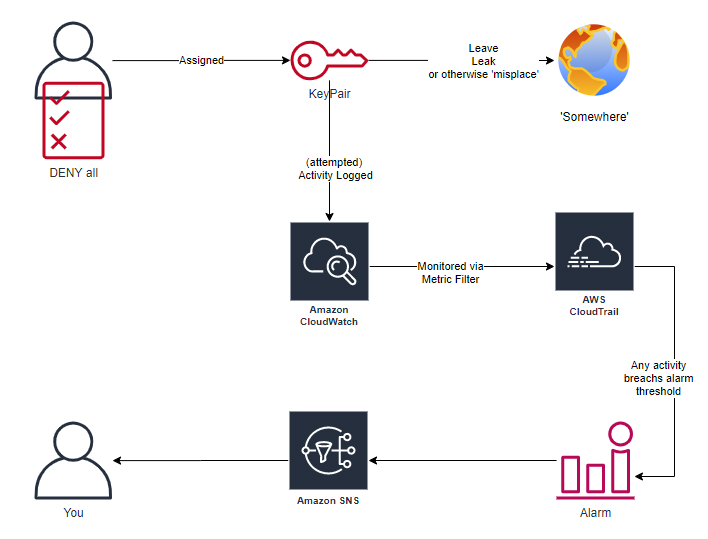

AWS HoneyUsers

Deception technology and techniques are having a resurgence, expanding beyond the ‘traditional’ high/low-interaction honeypots into honeyfiles, honeytokens and (as you may have guessed from the title) honeyusers. Today is the culmination of a “what if?” idea I’d been thinking about for years, actually started working on earlier in the year (but then 2020 happened), but is […]

AWS CLI – MFA with aws-vault

Previously I’ve covered why it’s important to protect AWS Key Pairs, how to enforce MFA to aid that protection, and how to continue working with the key pairs once MFA is required. If you missed the initial article post, all is available here. Everything in that article works, but as with a lot of security […]

DC44191 – AWS Security Ramblings

In the last week of August, in the middle of Summer vacation, I had the honour of being asked to give a presentation at the second meeting of the newly formed DC44191 in (virtual, for now) Newcastle. Local DefCon groups are an offshoot of the long-running DefCon conference, (usually) hosted annually in Las […]

AWS CLI – Forcing MFA

If you’re planning on using AWS efficiently, you’re going to want to automate with the CLI, various SDKs and/or the relatively newly released Cloud Development Kit (AWS-CDK). This typically requires an access key pair, which provides access to your account and needs to be secured against abuse. Adding MFA capabilities to the account reduces a […]